Generating word embeddings is a Natural Language Processing (NLP) technique in which words are converted into vectors of real numbers, so that they can be fed as input features into models built for custom tasks. Word embeddings can capture the context of a word in a document, its semantic similarity to other words, its relations with other words, and so on. Each word is mapped to an embedding (or "vector"), and these embeddings capture the meaning of words. There are various techniques for generating word embeddings; the most popular ones include Bag of Words, TF-IDF, Word2Vec, GloVe, BERT and GPT-2. Broadly, they fall into three main categories: context-independent, context-aware and large-model-based. This post is about generating word embeddings using BERT. But first of all, what is BERT, and why are we using it?

More about Word Embeddings

Tokenization - Tokenization is the process of splitting a piece of text into smaller units called tokens. This matters because raw text is processed at the token level. There are broadly three kinds of tokens: words, characters and subwords.
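To make the three granularities concrete, here is a small pure-Python sketch. The subword splitter uses greedy longest-match-first lookup in the style of BERT's WordPiece tokenizer, but the five-entry vocabulary `TOY_VOCAB` and the function names are hypothetical, chosen only for illustration. Real word tokenizers also split off punctuation, which this sketch skips.

```python
# A toy comparison of word-, character- and subword-level tokenization.
# TOY_VOCAB is a hypothetical five-entry vocabulary, not BERT's real one.

def word_tokenize(text: str) -> list[str]:
    """Lowercase and split on whitespace (real word tokenizers also split punctuation)."""
    return text.lower().split()


def char_tokenize(text: str) -> list[str]:
    """Every non-space character becomes its own token."""
    return [ch for ch in text.lower() if not ch.isspace()]


def wordpiece_tokenize(word: str, vocab: set[str], unk: str = "[UNK]") -> list[str]:
    """Greedy longest-match-first subword split, in the style of WordPiece.

    Pieces after the first are looked up with a '##' prefix, marking that they
    continue the current word rather than starting a new one.
    """
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        match = None
        while start < end:  # try the longest remaining substring first
            candidate = word[start:end]
            if start > 0:
                candidate = "##" + candidate
            if candidate in vocab:
                match = candidate
                break
            end -= 1
        if match is None:  # no piece fits: the whole word becomes unknown
            return [unk]
        pieces.append(match)
        start = end
    return pieces


TOY_VOCAB = {"word", "em", "##bed", "##ding", "##s"}

print(word_tokenize("Word embeddings"))             # ['word', 'embeddings']
print(char_tokenize("Word"))                        # ['w', 'o', 'r', 'd']
print(wordpiece_tokenize("embeddings", TOY_VOCAB))  # ['em', '##bed', '##ding', '##s']
```

Subword tokenization sits between the other two: common words stay whole, while rare words are built from smaller pieces instead of becoming a single unknown token.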

Encoding - Text encoding is the process of converting raw text into numeric representations (vectors), ideally in a way that preserves the meaning of words and sentences, because machines cannot process raw text directly.
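The first step of encoding is usually to map each token to an integer ID from a fixed vocabulary. A minimal sketch, assuming a hypothetical six-entry vocabulary and a hypothetical `encode` helper (BERT's real uncased vocabulary has 30,522 entries):

```python
# Hypothetical toy vocabulary: each token string is assigned an integer ID.
vocab = {"[PAD]": 0, "[UNK]": 1, "the": 2, "man": 3, "robbed": 4, "bank": 5}


def encode(tokens: list[str], vocab: dict[str, int]) -> list[int]:
    """Map each token to its vocabulary ID, falling back to the [UNK] ID."""
    unk_id = vocab["[UNK]"]
    return [vocab.get(tok, unk_id) for tok in tokens]


ids = encode(["the", "man", "robbed", "a", "bank"], vocab)
print(ids)  # [2, 3, 4, 1, 5]  ("a" is not in the vocabulary, so it maps to [UNK])
```

The IDs themselves carry no meaning; it is the model's embedding layer that turns each ID into a learned vector.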

Sentence embeddings - A sentence embedding is a single vector for the whole sequence, instead of a separate embedding for each token.

Pooling - Pooling is the process of converting a sequence of token embeddings into a single sentence embedding. It is usually done by adding a pooling layer on top of the language model that applies an aggregate function, such as the mean or the maximum, across the token embeddings.
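Mean pooling is one common choice of aggregate function. The sketch below assumes PyTorch and averages the token vectors of each sequence, using an attention mask (1 for real tokens, 0 for padding) so that padding positions do not dilute the average. `mean_pool` is a hypothetical helper name; max pooling or simply taking the [CLS] vector are other options.

```python
import torch


def mean_pool(token_embeddings: torch.Tensor, attention_mask: torch.Tensor) -> torch.Tensor:
    """Average token vectors into one sentence vector, ignoring padding.

    token_embeddings: [batch, seq_len, hidden]
    attention_mask:   [batch, seq_len], 1 for real tokens and 0 for padding
    returns:          [batch, hidden]
    """
    mask = attention_mask.unsqueeze(-1).to(token_embeddings.dtype)  # [batch, seq_len, 1]
    summed = (token_embeddings * mask).sum(dim=1)                   # sum over real tokens only
    counts = mask.sum(dim=1).clamp(min=1e-9)                        # number of real tokens
    return summed / counts


# Dummy input: one sequence of 3 tokens (the last one is padding), hidden size 2.
emb = torch.tensor([[[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]])
mask = torch.tensor([[1, 1, 0]])
print(mean_pool(emb, mask))  # tensor([[2., 3.]])
```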

What is BERT

BERT stands for Bidirectional Encoder Representations from Transformers. It is a Transformer-based model released by Google in late 2018. It can be used either to extract high-quality language features from text data or be fine-tuned on a specific task with your own data.

BERT MODEL

First, let's look at BERT's architecture briefly, to get a broader understanding of how BERT is trained to encode words. Two model sizes were released:

BERT-Base - 12-layer, 768-hidden-nodes, 12-attention-heads, 110M parameters

BERT-Large - 24-layer, 1024-hidden-nodes, 16-attention-heads, 340M parameters
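The parameter counts follow from these sizes. The arithmetic below is a sketch that assumes the standard BERT configuration values not listed above (a 30,522-token uncased WordPiece vocabulary, 512 positions, 2 segment types and a feed-forward size of 4 × hidden) and counts the embeddings, the encoder layers and the pooler; `bert_param_count` is a hypothetical helper.

```python
def bert_param_count(layers: int, hidden: int, vocab_size: int = 30522,
                     max_positions: int = 512, type_vocab: int = 2) -> int:
    """Estimate the parameter count of a BERT encoder (embeddings + layers + pooler).

    Assumes the feed-forward size is 4 * hidden, as in BERT-Base and BERT-Large.
    """
    ffn = 4 * hidden
    layer_norm = 2 * hidden                       # LayerNorm scale and bias
    embeddings = (vocab_size + max_positions + type_vocab) * hidden + layer_norm
    attention = 4 * (hidden * hidden + hidden)    # query, key, value and output projections
    feed_forward = (hidden * ffn + ffn) + (ffn * hidden + hidden)
    per_layer = attention + layer_norm + feed_forward + layer_norm
    pooler = hidden * hidden + hidden             # dense layer applied to the [CLS] vector
    return embeddings + layers * per_layer + pooler


print(bert_param_count(layers=12, hidden=768))   # 109482240, about 110M
print(bert_param_count(layers=24, hidden=1024))  # 335141888, about 335M
```

Almost all of the parameters live in the encoder layers, and the feed-forward sublayer of each layer is about twice the size of its attention sublayer. The results land close to the published 110M and 340M figures.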

From a very high-level perspective, BERT's architecture (in both the Base and Large models) consists only of Transformer encoder layers, because its goal is to build a language representation model rather than to generate text.

The input to BERT's encoder is a sequence of tokens, which are first converted into vectors and then processed by the neural network. Each token's input vector is the sum of three embeddings: a token embedding, a segment embedding and a position embedding.
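A simplified PyTorch sketch of that input layer: each token's input vector is the element-wise sum of three learned lookup tables, followed by a LayerNorm as in BERT (dropout is omitted). The class name `BertStyleInputEmbeddings` and the small sizes are hypothetical, chosen so the example runs instantly.

```python
import torch
import torch.nn as nn


class BertStyleInputEmbeddings(nn.Module):
    """Sum of token, segment and position embeddings, followed by LayerNorm."""

    def __init__(self, vocab_size: int, hidden: int, max_positions: int = 512, type_vocab: int = 2):
        super().__init__()
        self.token = nn.Embedding(vocab_size, hidden)
        self.segment = nn.Embedding(type_vocab, hidden)
        self.position = nn.Embedding(max_positions, hidden)
        self.norm = nn.LayerNorm(hidden)

    def forward(self, input_ids: torch.Tensor, segment_ids: torch.Tensor) -> torch.Tensor:
        # Positions 0, 1, 2, ... shared by every sequence in the batch: [1, seq_len]
        positions = torch.arange(input_ids.size(1), device=input_ids.device).unsqueeze(0)
        summed = self.token(input_ids) + self.segment(segment_ids) + self.position(positions)
        return self.norm(summed)  # [batch, seq_len, hidden]


torch.manual_seed(0)
layer = BertStyleInputEmbeddings(vocab_size=100, hidden=16)
ids = torch.tensor([[2, 45, 17, 3]])  # hypothetical token IDs
segments = torch.zeros_like(ids)      # a single sentence: every token in segment 0
out = layer(ids, segments)
print(out.shape)                      # torch.Size([1, 4, 16])
```

Because of the position embeddings, the same token ID at two different positions already gets two different input vectors, before any attention layer has run.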

The main idea behind pre-training is masked language modeling: some of the words in a sentence (15% of the tokens in the original paper) are masked, and the model is tasked with predicting them. As the model learns to make these predictions, it learns to produce powerful internal representations of words, which can be used as word embeddings.
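The BERT paper adds a twist to plain masking: of the tokens selected for prediction, 80% are replaced with [MASK], 10% with a random token, and 10% are left unchanged. A simplified pure-Python sketch of that rule; `mask_tokens` is a hypothetical helper, and selecting each token independently with probability `mask_prob` is a simplification of the original pre-training script.

```python
import random

MASK = "[MASK]"
SPECIAL = {"[CLS]", "[SEP]"}


def mask_tokens(tokens, vocab, mask_prob=0.15, rng=random):
    """BERT-style masking. Returns (masked_tokens, labels).

    labels holds the original token at positions chosen for prediction
    and None everywhere else; special tokens are never chosen.
    """
    masked, labels = list(tokens), [None] * len(tokens)
    for i, tok in enumerate(tokens):
        if tok in SPECIAL or rng.random() >= mask_prob:
            continue
        labels[i] = tok                    # the model must predict this token
        roll = rng.random()
        if roll < 0.8:
            masked[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            masked[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token
    return masked, labels


tokens = ["[CLS]", "that", "man", "robbed", "a", "bank", "[SEP]"]
masked, labels = mask_tokens(tokens, vocab=["river", "fish", "money"],
                             mask_prob=0.5, rng=random.Random(0))
print(masked)
print(labels)
```

Keeping some selected tokens unchanged or randomly replaced means the model cannot assume that only [MASK] positions matter, which is useful because [MASK] never appears when the model is later used on real text.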

Why Use BERT

As mentioned above, techniques such as Word2Vec and TF-IDF are context-independent: each word gets a single, fixed vector representation, regardless of the context in which it appears. The classic illustration is the same word used in two different contexts:

“That man robbed a bank.”

“The man went fishing by the bank of the river.”

Word2Vec creates the same embedding for the word "bank" in both sentences, whereas BERT produces a different embedding for each occurrence, because it takes the surrounding context into account. This gives BERT an advantage over context-independent methods, since contextual embeddings can distinguish between different senses of the same word.
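To see the difference mechanically, the sketch below builds a tiny, randomly initialised `BertModel` from a `BertConfig` (so nothing is downloaded) and feeds it two hypothetical token-ID sequences that share one token at the same position. BERT's own input embedding table plays the role of a static, Word2Vec-style lookup: the same ID always returns the same row. The encoder output for that ID, however, changes with the surrounding tokens. Because the weights are untrained, the similarity values themselves mean nothing; with the pretrained `bert-base-uncased` model and the two "bank" sentences above, the vectors would be compared in the same way.

```python
import torch
from transformers import BertConfig, BertModel

# A tiny, randomly initialised BERT: untrained, so only *whether* vectors differ matters.
torch.manual_seed(0)
config = BertConfig(vocab_size=50, hidden_size=32, num_hidden_layers=2,
                    num_attention_heads=2, intermediate_size=64)
tiny_model = BertModel(config).eval()  # eval() disables dropout

# Hypothetical token IDs: 7 plays the role of "bank", at position 3 in both sequences.
sent_a = torch.tensor([[2, 11, 12, 7, 3]])
sent_b = torch.tensor([[2, 20, 21, 7, 3]])

with torch.no_grad():
    # Static lookup: the same row of the embedding table, whatever the context.
    static_a = tiny_model.embeddings.word_embeddings(sent_a)[0, 3]
    static_b = tiny_model.embeddings.word_embeddings(sent_b)[0, 3]
    # Contextual: the final encoder output at the position of token 7.
    ctx_a = tiny_model(sent_a).last_hidden_state[0, 3]
    ctx_b = tiny_model(sent_b).last_hidden_state[0, 3]

cos = torch.nn.functional.cosine_similarity
print(cos(static_a, static_b, dim=0).item())  # ~1.0: identical vectors
print(cos(ctx_a, ctx_b, dim=0).item())        # below 1.0: the context changed the vector
```

Placing the shared token at the same position in both sequences ensures the difference comes from the neighbouring tokens, not from the position embeddings.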

Implementation

Loading pre-trained BERT

Hugging Face's transformers is a popular library that provides pretrained models along with the tools to run them. To use BERT from PyTorch, first install the library:

!pip install transformers

Now let's import PyTorch and the BERT tokenizer. There are a few variations of BERT available; we'll use BERT-Base, uncased (the text is lowercased, so casing is ignored).

import torch

from transformers import BertTokenizer, BertModel

# Load pre-trained model tokenizer

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

Format the Input

BERT requires its input in a special format that uses two special tokens, [CLS] and [SEP]. The [CLS] token appears at the start of the text, and its final hidden state is mainly used for classification tasks; the [SEP] token marks the end of a sentence and separates the two sentences of a pair. Now, let's take a text and convert it to this format.

text = "This post is about generating word embeddings using BERT"

marked_text = "[CLS] " + text + " [SEP]"

# Tokenize the sentence with the BERT tokenizer.

tokenized_text = tokenizer.tokenize(marked_text)

# Map the tokens to their indexes in the vocabulary.

indexed_tokens = tokenizer.convert_tokens_to_ids(tokenized_text)

# Print out the tokens.

print(tokenized_text)

Output:

['[CLS]', 'this', 'post', 'is', 'about', 'generating', 'word', 'em', '##bed', '##ding', '##s', 'using', 'bert', '[SEP]']

As shown above, the BERT tokenizer splits the text into tokens, and some tokens start with "##". This is because the tokenizer works with a fixed, limited vocabulary: if a whole word is not in the vocabulary, it is split into subword pieces that are, and every piece that continues a word (rather than starting one) is prefixed with "##". This preserves information about the word's structure: the standalone token "bed" and the word-internal token "##bed" are different tokens with different IDs.
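Because one word may be split into several pieces, it is often useful to know which token positions belong to which original word, for example to combine (say, average) the vectors of a word's pieces into one word vector later. A small pure-Python sketch that undoes the "##" convention on the token list printed above; `group_wordpieces` is a hypothetical helper.

```python
def group_wordpieces(tokens: list[str]) -> list[tuple[str, list[int]]]:
    """Rebuild whole words from WordPiece tokens as (word, token_positions) pairs."""
    words: list[tuple[str, list[int]]] = []
    for i, tok in enumerate(tokens):
        if tok.startswith("##") and words:
            text, positions = words[-1]
            words[-1] = (text + tok[2:], positions + [i])  # continue the current word
        else:
            words.append((tok, [i]))                       # start a new word
    return words


tokens = ['[CLS]', 'this', 'post', 'is', 'about', 'generating', 'word',
          'em', '##bed', '##ding', '##s', 'using', 'bert', '[SEP]']
for word, positions in group_wordpieces(tokens):
    print(word, positions)
# Among other lines, this prints: embeddings [7, 8, 9, 10]
```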

Now, let's generate segment IDs. What is a segment ID? BERT is trained on sentence pairs and uses segment IDs of 0 and 1 to distinguish the first sentence from the second. So, for the whole tokenized text, we have to specify which sentence each token belongs to.

# Mark all the tokens as belonging to a single sentence.

segments_ids = [1] * len(tokenized_text)

print(segments_ids)
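When the input really contains two sentences, the usual layout is `[CLS] A [SEP] B [SEP]`, with segment ID 0 for the first part (including [CLS] and the first [SEP]) and 1 for the second part (including the final [SEP]). This matches the `token_type_ids` that the Hugging Face tokenizer produces for sentence pairs; for a single sentence, that tokenizer uses all 0s. A minimal pure-Python sketch; `build_pair_input` is a hypothetical helper.

```python
def build_pair_input(tokens_a: list[str], tokens_b: list[str]) -> tuple[list[str], list[int]]:
    """Lay out a sentence pair as [CLS] A [SEP] B [SEP] with matching segment IDs."""
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    # Segment 0 covers [CLS], sentence A and its [SEP]; segment 1 covers B and the final [SEP].
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    return tokens, segment_ids


tokens, segment_ids = build_pair_input(["the", "man", "went", "fishing"],
                                       ["he", "sat", "by", "the", "bank"])
print(tokens)
print(segment_ids)  # [0, 0, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1]
```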

Before feeding the token indexes and the segment IDs into the model to generate embeddings, we'll convert both into PyTorch tensors.

# Convert inputs to PyTorch tensors

tokens_tensor = torch.tensor([indexed_tokens])

segments_tensors = torch.tensor([segments_ids])
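The extra pair of brackets in `torch.tensor([indexed_tokens])` adds a batch dimension of size 1. To batch several sentences, shorter ones must be padded to a common length, and an attention mask tells the model which positions are padding. A minimal PyTorch sketch; `pad_batch` is a hypothetical helper, and it assumes a padding ID of 0, which is the [PAD] ID in `bert-base-uncased` (where [CLS] is 101 and [SEP] is 102).

```python
import torch


def pad_batch(batch_ids: list[list[int]], batch_segments: list[list[int]], pad_id: int = 0):
    """Pad variable-length sequences into [batch, max_len] tensors plus an attention mask."""
    max_len = max(len(ids) for ids in batch_ids)
    input_ids, token_type_ids, attention_mask = [], [], []
    for ids, segs in zip(batch_ids, batch_segments):
        n_pad = max_len - len(ids)
        input_ids.append(ids + [pad_id] * n_pad)
        token_type_ids.append(segs + [0] * n_pad)
        attention_mask.append([1] * len(ids) + [0] * n_pad)  # 0 marks padding to ignore
    return torch.tensor(input_ids), torch.tensor(token_type_ids), torch.tensor(attention_mask)


# Two sentences of different lengths; the IDs 7, 8 and 9 are hypothetical.
ids, segs, mask = pad_batch([[101, 7, 8, 102], [101, 9, 102]], [[0, 0, 0, 0], [0, 0, 0]])
print(ids)   # rows: [101, 7, 8, 102] and [101, 9, 102, 0]
print(mask)  # rows: [1, 1, 1, 1] and [1, 1, 1, 0]
```

The three tensors would then be passed to the model as `model(ids, attention_mask=mask, token_type_ids=segs)`. With the real tokenizer, `tokenizer(list_of_texts, padding=True, return_tensors="pt")` returns the same three fields in one call.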

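Load the pretrained model using the code below and put it in evaluation mode, which disables dropout so that the same input always produces the same output. Because model.eval() returns the model, in a notebook this cell also prints the definition of the BERT model.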

# output_hidden_states=True makes the model also return the hidden states of every layer.
model = BertModel.from_pretrained('bert-base-uncased', output_hidden_states=True)

model.eval()

NOTE - The output is quite long, so it is not reproduced in full.

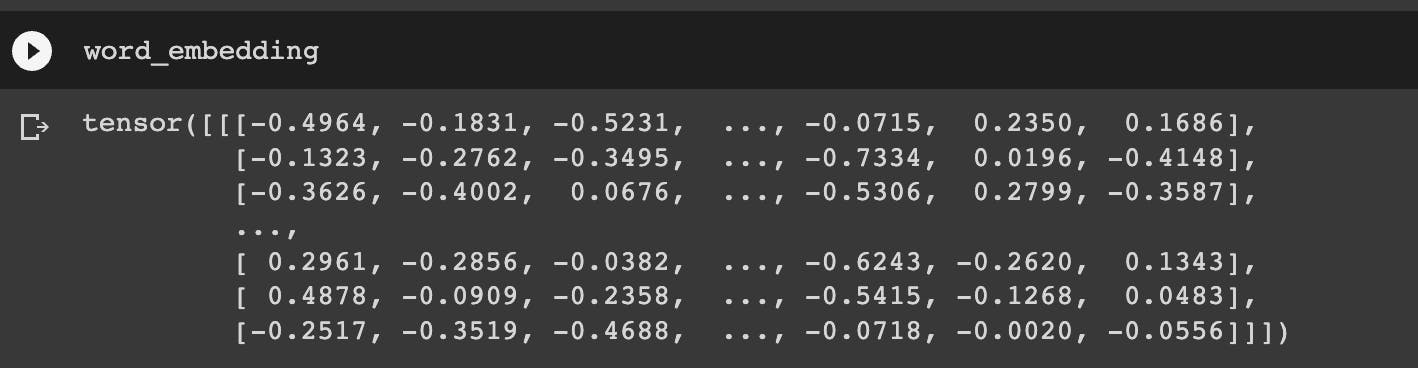

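Now, we'll run the model and store its output, including the hidden states. We wrap the call in torch.no_grad() because we are not training, so PyTorch does not need to track gradients. Because we passed output_hidden_states = True when loading the model, the third element of the output holds the hidden states. Their structure is a little confusing: hidden_states is a tuple of 13 tensors (the output of the embedding layer followed by the outputs of the 12 encoder layers), each of shape [batch size, number of tokens, 768]. Taken together, they have four dimensions: layer number, batch number, token number and hidden unit.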

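with torch.no_grad():
    # Pass the segment IDs by keyword: the second positional argument of BertModel is attention_mask.
    outputs = model(tokens_tensor, token_type_ids=segments_tensors)
    hidden_states = outputs.hidden_states  # same as outputs[2]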
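Since hidden_states is a tuple with one tensor per layer, it is often easier to work with after stacking it into a single 4-D tensor and reordering it so that the token comes first. The sketch below assumes PyTorch and uses random tensors in place of the real model output (14 tokens, matching the example sentence), so it runs without downloading the model. Summing the last four layers, shown at the end, is another common way to combine them and keeps 768 values per token.

```python
import torch

# Stand-in for the model output: BERT-Base returns 13 tensors (embedding output
# + 12 encoder layers), each [batch, tokens, 768]. Random values for illustration only.
num_tokens = 14
hidden_states = tuple(torch.randn(1, num_tokens, 768) for _ in range(13))

# Stack into one 4-D tensor: [layers, batch, tokens, hidden]
stacked = torch.stack(hidden_states, dim=0)
print(stacked.shape)           # torch.Size([13, 1, 14, 768])

# Drop the batch dimension and put tokens first: [tokens, layers, hidden]
token_embeddings = stacked.squeeze(1).permute(1, 0, 2)
print(token_embeddings.shape)  # torch.Size([14, 13, 768])

# One vector per token by summing the last four layers: [tokens, 768]
summed_last_four = token_embeddings[:, -4:, :].sum(dim=1)
print(summed_last_four.shape)  # torch.Size([14, 768])
```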

We still don't have the final embeddings. One common strategy is to concatenate the outputs of the last four layers, which gives a single vector per token. Each vector has 4 x 768 = 3,072 values.

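# Concatenate the last four layers along the hidden dimension: shape [1, number of tokens, 3072]
word_embedding = torch.cat([hidden_states[i] for i in [-1, -2, -3, -4]], dim=-1)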

Output: